KDD2020 Tutorial on Interpreting and Explaining Deep Neural Networks: A Perspective on Time Series Data

1:00 - 4:00 PM, August 23, 2020

Entire Tutorial (Part1~Part3)

Presentation Slides: Part 1, Part 2, Part 3

Introduction

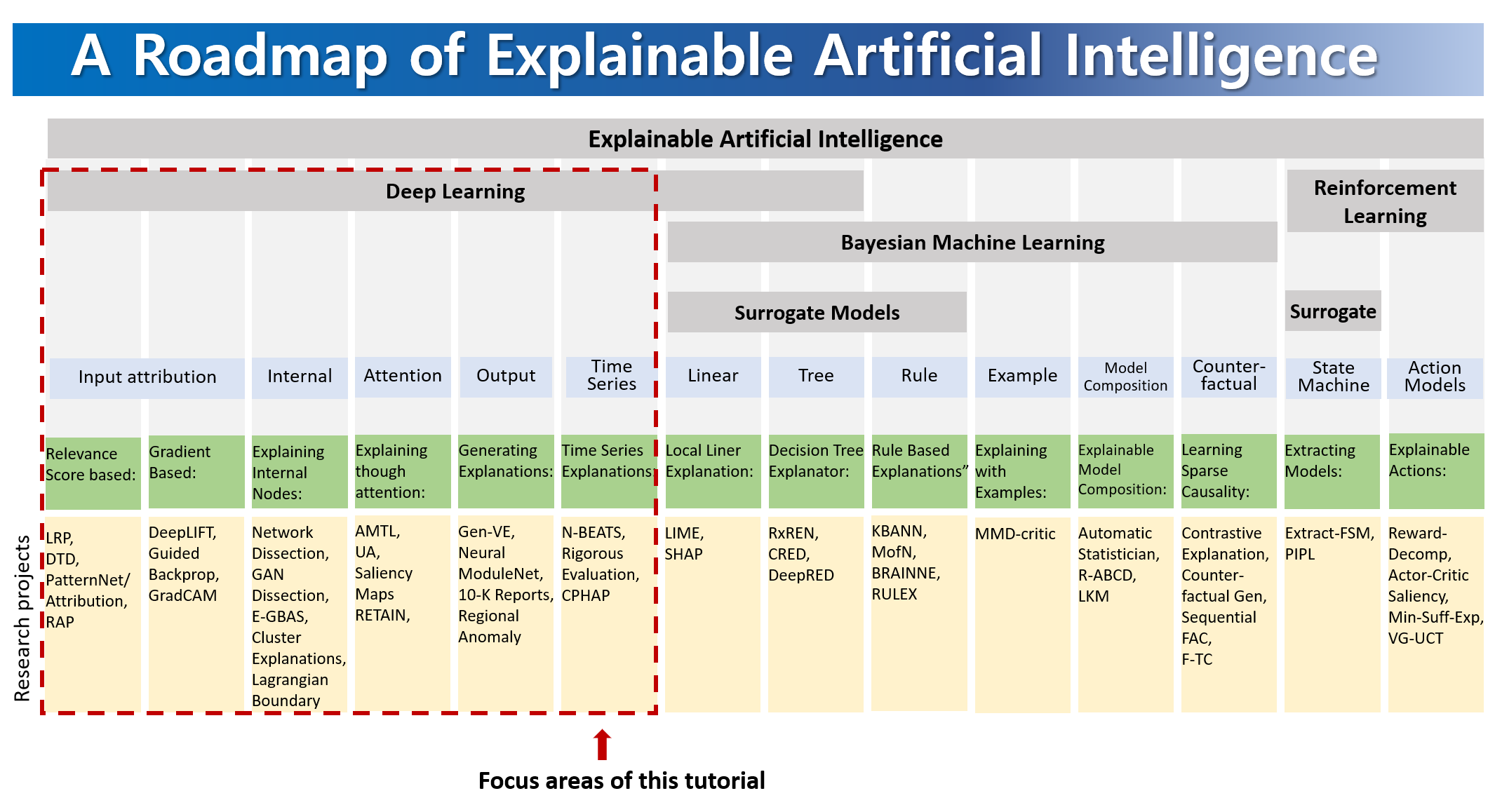

Explainable and interpretable machine learning models and algorithms are important topics that have received growing attention in research, applications, and administration. Many advanced Deep Neural Networks (DNNs) are often perceived as black boxes. Researchers would like to interpret what a DNN has learned in order to identify biases and failure modes and to improve models. In this tutorial, we will provide a comprehensive overview of methods for analyzing deep neural networks and insight into how these XAI methods help us understand time series data.

Program

Tutorial outline

| Time | Tutor | Detail |
|---|---|---|
| 50 min | Jaesik Choi (KAIST) | Overview of Interpreting Deep Neural Networks |
| 50 min | Jaesik Choi (KAIST) | Interpreting Inside of Deep Neural Networks |
| 50 min | Jaesik Choi (KAIST) | Explaining Time Series Data |

Overview of Interpreting Deep Neural Networks

First, we will provide a comprehensive overview of the fundamental principles of interpreting and explaining deep neural networks. Topics may include relevance-score-based methods [Layer-wise Relevance Propagation (LRP), Deep Taylor Decomposition (DTD), PatternNet, and RAP] and gradient-based methods [DeConvNet, DeepLIFT, Guided Backprop]. We will also offer a new perspective by presenting methods for meta-explanations [CleverHans] and neuralization methods [ClusterExplanations].
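To give a flavor of relevance-score-based methods, the following is a minimal sketch of the LRP epsilon rule on a toy two-layer ReLU network. It is an illustration under stated assumptions, not the tutorial's implementation: the weights `W1` and `W2`, the input `x`, the bias-free layers, and the helper names `dense`, `relu`, and `lrp_epsilon` are all hypothetical.

```python
def dense(x, W):
    """Bias-free linear layer: z_j = sum_i x_i * W[i][j]."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]


def relu(z):
    return [max(0.0, v) for v in z]


def lrp_epsilon(a, W, R_out, eps=1e-9):
    """Redistribute the relevance R_out of a layer's outputs onto its inputs a."""
    z = dense(a, W)
    # Stabilize each denominator with a small epsilon that keeps the sign of z_j.
    s = [R_out[j] / (z[j] + (eps if z[j] >= 0 else -eps)) for j in range(len(z))]
    # Input i receives relevance in proportion to its contribution a_i * W[i][j].
    return [a[i] * sum(W[i][j] * s[j] for j in range(len(z))) for i in range(len(a))]


# Hypothetical toy network: 3 inputs -> 2 hidden units (ReLU) -> 1 output.
W1 = [[1.0, -1.0], [0.5, 2.0], [-1.0, 1.0]]
W2 = [[1.0], [2.0]]
x = [1.0, 2.0, 0.5]

h = relu(dense(x, W1))   # forward pass, hidden layer
y = dense(h, W2)         # forward pass, output score

R_hidden = lrp_epsilon(h, W2, y)        # start from the output score itself
R_input = lrp_epsilon(x, W1, R_hidden)  # propagate down to the inputs

print("output score:", y[0])
print("input relevances:", R_input)
```

In this bias-free setting the input relevances sum (up to the epsilon term) to the output score, which is the conservation property that relevance propagation methods are built around.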

Interpreting Inside of Deep Neural Networks

In this section, we will present methods for interpreting the internal nodes of deep neural networks. We will start the session by introducing methods for visualizing the channels of Convolutional Neural Networks [Network Dissection] and Generative Adversarial Networks [GAN Dissection]. Then we will present methods [Convex Hull, Cluster Explanations, E-GBAS] that analyze the internal nodes of DNNs by analyzing a set of decision boundaries. Time permitting, we will briefly review methods that explain DNNs using attention-based methods.
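As a rough illustration of how a channel can be matched to a visual concept, the sketch below thresholds one activation map and scores its overlap with a concept mask using intersection over union (IoU), in the spirit of Network Dissection. The activation values, the mask, the fixed threshold, and the function name `unit_concept_iou` are hypothetical; the published method derives a per-unit threshold from activation statistics over a whole dataset, which is omitted here.

```python
def unit_concept_iou(activation, concept_mask, threshold):
    """IoU between the thresholded activation map and a binary concept mask."""
    intersection = 0
    union = 0
    for act_row, mask_row in zip(activation, concept_mask):
        for a, m in zip(act_row, mask_row):
            unit_on = a > threshold
            concept_on = bool(m)
            intersection += int(unit_on and concept_on)
            union += int(unit_on or concept_on)
    return intersection / union if union else 0.0


# Hypothetical 3x3 activation map of one channel (already at mask resolution).
activation = [
    [0.1, 0.9, 0.8],
    [0.0, 0.7, 0.2],
    [0.0, 0.1, 0.0],
]
# Hypothetical binary segmentation mask for one concept.
concept_mask = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 0, 0],
]

score = unit_concept_iou(activation, concept_mask, threshold=0.5)
print("IoU:", score)
```

A channel whose IoU with some concept is high can then be labeled as a detector for that concept; the cutoff for "high" is a design choice not fixed by this sketch.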

Explaining Time Series Data

In the last section, we will introduce recent explainability methods for the time series domain. We will introduce [N-BEATS], a framework that performs regression tasks and provides interpretable outputs without considerable loss in accuracy. [CPHAP] interprets the decisions of temporal neural networks by extracting highly activated periods. Furthermore, the clustered results of this method provide intuition for understanding the data. Finally, we will present a method [Automatic Statistician] that predicts time series and produces a human-readable report, including the reasons for its predictions.
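The idea of extracting highly activated periods can be sketched as follows: slide a convolutional filter over the series, apply ReLU, and map each strongly activated position back to the input interval it covers. This is a simplified illustration and not the [CPHAP] implementation; the hand-set filter `kernel`, the threshold, the toy series, and the function names are assumptions, and the clustering step is omitted.

```python
def conv1d_relu(series, kernel):
    """Valid 1D cross-correlation followed by ReLU."""
    k = len(kernel)
    return [
        max(0.0, sum(series[t + i] * kernel[i] for i in range(k)))
        for t in range(len(series) - k + 1)
    ]


def highly_activated_periods(series, kernel, threshold):
    """Return (start, end) input index ranges whose activation exceeds threshold."""
    activations = conv1d_relu(series, kernel)
    k = len(kernel)
    # Position t of the activation map covers input indices t .. t + k - 1.
    return [(t, t + k - 1) for t, a in enumerate(activations) if a > threshold]


# Hypothetical toy series with two peaks and a hand-set peak-detecting filter.
series = [0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 2.0, 0.0]
kernel = [-1.0, 2.0, -1.0]

periods = highly_activated_periods(series, kernel, threshold=3.0)
print("highly activated periods:", periods)
```

In a trained network the filters are learned rather than hand-set, and the extracted sub-sequences would then be grouped so that each cluster summarizes a pattern the channel responds to.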

Tutors

Jaesik Choi has been a director of the Explainable Artificial Intelligence Center of Korea since 2017. He is an associate professor at the Graduate School of Artificial Intelligence at Korea Advanced Institute of Science and Technology (KAIST). He received his BS degree in computer science and engineering from Seoul National University in 2004 and his PhD degree in computer science from the University of Illinois at Urbana-Champaign in 2012.

Email: jaesik.choi@kaist.ac.kr

Homepage: http://sailab.kaist.ac.kr/jaesik

Affiliation: Korea Advanced Institute of Science and Technology (KAIST)

Address: 8, Seongnam-daero 331, 18F KINS Tower, Bundang, Seongnam, Gyeonggi, Republic of Korea

Prerequisites

Target Audience: This tutorial is aimed at a general audience familiar with the basics of machine learning and statistics. Anyone with the knowledge of a master's-level graduate student should have no difficulty following the tutorial.

Equipment attendees should bring: Nothing

Reference

22. [10-K Reports], Y. Chun et al., “Predicting and Explaining Cause of Changes in Stock Prices By Reading Annual Reports”, NeurIPS 2019 Workshop on Robust AI in Financial Services

Additional References of Figure 1

Relevance Score based

Gradient Based

Explaining Internal Nodes

Explaining through attention

Generating Explanations

3. [10-K Reports], Y. Chun et al., “Predicting and Explaining Cause of Changes in Stock Prices By Reading Annual Reports”, NeurIPS 2019 Workshop on Robust AI in Financial Services

Local Linear Explanation

Decision Tree Explanator

Rule Based Explanator

Explaining with Examples

Explainable Model Composition

Learning Extracting Causality

Extracting Models